Understanding FP16: The Half-Precision Floating-Point Format

FP16, short for “half-precision floating-point,” is a data format used in computing to represent floating-point numbers with 16 bits, following the IEEE 754 standard. While traditional computing often relies on 32-bit (single precision) or 64-bit (double precision) floating-point numbers, FP16 has carved out a valuable niche, particularly in fields like machine learning, graphics processing, and high-performance computing.

FP16 is a simplified, compact representation that enables faster processing speeds, reduced memory footprint, and more efficient data handling, making it ideal for AI and real-time graphics applications.

What is the IEEE 754 Standard?

The IEEE 754 standard defines how floating-point numbers are encoded and how arithmetic on them behaves, so that results are consistent across computing systems. It defines several binary floating-point formats, including:

- Single precision (FP32) – 32 bits

- Double precision (FP64) – 64 bits

- Half precision (FP16) – 16 bits

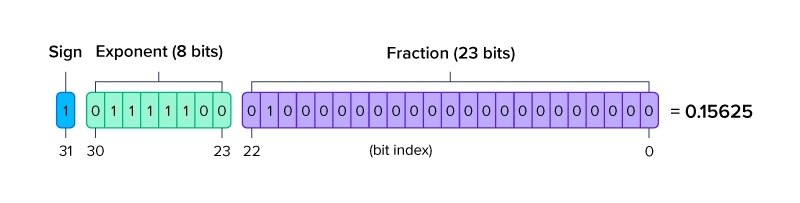

In FP16, the bit allocation consists of:

- 1 sign bit

- 5 exponent bits

- 10 mantissa (fraction) bits

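This configuration keeps FP16 compact at the cost of both range and precision: the largest finite value is 65,504, and the 10 fraction bits give roughly three significant decimal digits. The primary benefit is computational efficiency: on hardware with native FP16 support, FP16 operations can run substantially faster than the same operations in FP32 or FP64.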
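The 1/5/10 bit layout can be inspected directly. The following is a minimal sketch using Python's standard `struct` module, whose `e` format character packs a half-precision float. The helper names `fp16_fields` and `fp16_decode` are illustrative, not part of any library, and the decoder handles finite values only.

```python
import struct

def fp16_fields(value: float) -> tuple[int, int, int]:
    """Round value to FP16 and return its (sign, exponent, fraction) bit fields."""
    # "<e" packs an IEEE 754 half-precision float; "<H" reads the same 2 bytes as an integer.
    (bits,) = struct.unpack("<H", struct.pack("<e", value))
    sign = bits >> 15                # 1 sign bit
    exponent = (bits >> 10) & 0x1F   # 5 exponent bits, stored with a bias of 15
    fraction = bits & 0x3FF          # 10 fraction bits
    return sign, exponent, fraction

def fp16_decode(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild the value from its fields (finite values only; exponent 31 means inf/NaN)."""
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of -14.
        magnitude = (fraction / 1024) * 2.0 ** -14
    else:
        # Normal: implicit leading 1, exponent stored with a bias of 15.
        magnitude = (1 + fraction / 1024) * 2.0 ** (exponent - 15)
    return -magnitude if sign else magnitude

print(fp16_fields(1.0))          # (0, 15, 0)
print(fp16_fields(-2.5))         # (1, 16, 256)
print(fp16_decode(0, 30, 1023))  # 65504.0, the largest finite FP16 value
```

Reading the second line: -2.5 is -1.25 × 2^1, so the sign bit is 1, the stored exponent is 1 + 15 = 16, and the fraction is 0.25 × 1024 = 256.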

Why FP16? The Benefits of Half-Precision Floating Points

FP16’s compact data structure comes with several significant advantages:

1. Enhanced Processing Speed

- FP16 values are half the size of FP32 values, so twice as many fit in the same memory bandwidth, cache, and vector registers, and hardware with native FP16 support can process more of them per cycle.

- This is crucial in applications where speed is prioritized, such as video games, image processing, and AI-driven decision-making.

2. Reduced Memory Footprint

- Since FP16 uses only 16 bits, it consumes half the memory of FP32.

- In machine learning models with vast datasets, reducing memory footprint is critical to allow more data to be processed simultaneously.

3. Energy Efficiency

- Reduced memory and faster data processing also lead to lower power consumption.

- This is essential for mobile devices and edge devices that rely on efficient energy usage.
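To put the memory savings in concrete terms, here is a small sketch that computes how much storage a set of model weights needs in each format. The one-billion-parameter model is a hypothetical example, and the figure covers raw weight storage only (no activations, gradients, or optimizer state).

```python
import struct

# Bytes per value, taken from the struct module's fixed-size float formats.
BYTES_PER_VALUE = {
    "FP16": struct.calcsize("<e"),
    "FP32": struct.calcsize("<f"),
    "FP64": struct.calcsize("<d"),
}

def weights_size_gib(num_parameters: int, fmt: str) -> float:
    """Raw storage for num_parameters values in the given format, in GiB."""
    return num_parameters * BYTES_PER_VALUE[fmt] / 2**30

# Hypothetical model with one billion parameters.
for fmt in BYTES_PER_VALUE:
    print(f"{fmt}: {weights_size_gib(1_000_000_000, fmt):.2f} GiB")
```

Whatever the parameter count, the FP16 figure is exactly half the FP32 figure and a quarter of the FP64 figure.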

How FP16 is Used in Deep Learning and AI

In deep learning, FP16 is widely adopted because of its computational efficiency. Some advantages of using FP16 in machine learning are:

1. Training and Inference Optimization

- By representing weights and activations in FP16, neural networks can train faster without a significant loss of accuracy.

- Many GPUs and AI accelerators support mixed-precision training, where numerically sensitive computations (such as weight updates and accumulations) stay in FP32 while less sensitive parts run in FP16 to boost performance.

2. Memory Optimization for Larger Models

- As models grow, FP16 allows researchers to fit larger models within the same memory constraints, facilitating more complex and powerful architectures.

- FP16’s reduced memory requirement enables faster data loading and more efficient parallel processing.

3. Faster Deployment in Real-Time Applications

- Real-time applications, such as autonomous vehicles or facial recognition, benefit from FP16’s speed, allowing faster inference with lower latency.
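One reason mixed-precision setups keep weight updates in higher precision can be shown without any ML framework. The sketch below emulates FP16 and FP32 storage by rounding through Python's `struct` module. The starting weight, update size, and step count are made-up values chosen for illustration, and `to_fp16` / `to_fp32` are hypothetical helpers, not a real training API.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (emulates FP16 storage)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest FP32 value (emulates FP32 storage)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

update = 1e-4   # a small per-step weight update (illustrative value)
steps = 1000

w16 = 1.0       # weight stored in FP16
w32 = 1.0       # weight stored in FP32
for _ in range(steps):
    # Near 1.0, adjacent FP16 values are 2**-10 (about 0.00098) apart,
    # so adding 1e-4 rounds straight back to the old value.
    w16 = to_fp16(w16 + update)
    w32 = to_fp32(w32 + update)

print(w16)  # 1.0: every update was lost
print(w32)  # close to 1.1: the updates accumulated
```

In this toy setting the FP16 weight never moves, while the FP32 copy ends up near the expected 1.1. Keeping an FP32 copy of the weights for the update step is one common way to avoid this kind of stagnation.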

FP16 in Graphics Processing

Graphics processing has been another primary beneficiary of FP16. With 3D rendering, virtual reality, and other graphics-intensive tasks, FP16 allows for:

- Efficient shading and rendering of 3D models, enhancing the real-time user experience.

- Smoother frame rates and lower processing requirements in visual applications such as gaming and VR, where FP16 is often precise enough for rendering and shading without a visible loss of quality.

Comparing FP16 with FP32 and FP64

| Precision Format | Bits (sign / exponent / fraction) | Common Uses | Pros | Cons |
|---|---|---|---|---|
| FP16 | 16 (1 / 5 / 10) | AI, graphics, gaming | Faster, less memory | Lower precision, narrow range |
| FP32 | 32 (1 / 8 / 23) | Standard computing | Balanced precision | Moderate memory usage |
| FP64 | 64 (1 / 11 / 52) | Scientific computations | High precision | High memory usage, slower |

FP32 and FP64 provide higher precision but are slower and more resource-intensive, making FP16 an appealing compromise for tasks where speed and resource usage are more critical than extreme accuracy.
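The precision and range gap between the three formats follows from their exponent and fraction widths. As a rough sketch, the snippet below derives the largest finite value, the smallest positive normal value, and the machine epsilon (the gap between 1.0 and the next representable value) from those widths. The FP32 and FP64 widths used here (8/23 and 11/52) are the standard IEEE 754 values, and `format_limits` is an illustrative helper.

```python
import sys

# (exponent bits, fraction bits) for each IEEE 754 binary format
FORMATS = {"FP16": (5, 10), "FP32": (8, 23), "FP64": (11, 52)}

def format_limits(exp_bits: int, frac_bits: int) -> dict:
    """Derive basic numeric limits from the exponent and fraction widths."""
    bias = 2 ** (exp_bits - 1) - 1
    return {
        "max": (2 - 2.0 ** -frac_bits) * 2.0 ** bias,  # largest finite value
        "min_normal": 2.0 ** (1 - bias),               # smallest positive normal value
        "epsilon": 2.0 ** -frac_bits,                  # gap between 1.0 and the next value
    }

for name, (exp_bits, frac_bits) in FORMATS.items():
    limits = format_limits(exp_bits, frac_bits)
    print(f"{name}: max={limits['max']:.4e}  "
          f"min_normal={limits['min_normal']:.4e}  epsilon={limits['epsilon']:.4e}")

# Sanity check: the FP64 row matches Python's own float type.
assert format_limits(11, 52)["max"] == sys.float_info.max
```

For FP16 this gives a maximum of 65,504 and an epsilon of 2^-10 (about 0.001), compared with an epsilon of about 1.2 × 10^-7 for FP32.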

The Trade-offs of Using FP16

FP16’s main drawbacks are its lower precision and narrower range. This isn’t always an issue in neural networks, where small discrepancies often do not significantly impact results, but it can cause problems in cases requiring exact calculations or very large or very small values. For this reason, FP16 is generally used alongside FP32 in mixed-precision setups, combining FP16’s speed with FP32’s reliability.

Situations Unsuitable for FP16

- Scientific calculations that demand high precision.

- Financial computations where exact decimal places are crucial.

- Engineering simulations where minute changes could impact results.
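A few round trips through FP16 make these limits concrete. This is a minimal sketch using Python's `struct` module to emulate FP16 storage. The helper `to_fp16` and the sample values are illustrative only.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest FP16 value (emulates FP16 storage)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

# Decimal fractions are only approximated, with about three significant digits.
print(to_fp16(0.1))      # 0.0999755859375

# Above 2048, adjacent FP16 values are 2 apart, so odd integers cannot be stored.
print(to_fp16(2049.0))   # 2048.0

# Between 512 and 1024 the spacing is 0.5, so an amount like 1000.37 loses its cents.
print(to_fp16(1000.37))  # 1000.5
```

Errors of this size are often tolerable for neural-network weights, but not for account balances or tightly converged simulations.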

Conclusion

FP16, the half-precision floating-point format, is a powerful tool in modern computing, especially when speed, memory efficiency, and energy efficiency are priorities. With applications ranging from machine learning to gaming graphics, FP16 trades some precision and range for performance and memory savings, making it valuable wherever large volumes of data must be processed quickly.

Frequently Asked Questions (FAQs)

1. What is FP16 used for?

FP16 is primarily used in deep learning, graphics processing, and any application where speed and efficiency are more critical than precision.

2. Why is FP16 popular in AI?

FP16 is popular in AI because it allows for faster processing and reduced memory usage, both essential for training large neural networks and running low-latency inference in real time.

3. How does FP16 differ from FP32?

FP16 uses only 16 bits compared to FP32’s 32 bits, which means FP16 is faster and less memory-intensive but less precise.

4. Can I use FP16 for scientific computations?

FP16 is generally not recommended for scientific computations that require high precision; FP32 or FP64 would be more appropriate.

5. Is FP16 suitable for gaming graphics?

Yes, FP16 is often used in gaming graphics to render images faster and use less memory, which helps in achieving higher frame rates and better real-time visuals.